Elon Musk, Stephen Hawking warn of artificial intelligence dangers

By Adario Strange From Mashable

Call it preemptive extinction panic, smart people buying into sci-fi hype or simply a prudent stance on a possible future issue, but the fear around artificial intelligence is gaining traction among those with the credentials to back up the distress.

The latest example of this AI agita turning into real-world action is an open letter, posted online Sunday, that calls for safety measures to be instituted and was signed by none other than Tesla’s Elon Musk and famed theoretical physicist Stephen Hawking.

Based on comments made at Tuesday’s Automotive News World Congress in Detroit, Musk’s concerns about autonomous systems extend to cars as well.

“People should be concerned about safety with autonomous vehicles,” Musk said, according to a report in The Verge. “The standard for safety should be much higher for self-driving cars.”

But that’s about as far as Musk went with his AI concerns at the event, although his earlier comments on the topic are far more colorful.

Others digitally signing their names to the AI safety document include noted singularity theorist and science fiction author Vernor Vinge, Lucasfilm’s John Gaeta (one of the people behind the special effects in the AI apocalypse film The Matrix), researchers from Oxford, Cambridge, MIT and Stanford, as well as executives from Google, Microsoft and Amazon.

Titled “Research Priorities for Robust and Beneficial Artificial Intelligence: an Open Letter,” the missive lays out an exceedingly calm and conservative approach to the topic, with no direct mention of AI going rogue — at least, not at first. But dig deeper, inside the attached “research priorities document,” and that’s where you’ll find the real fears behind the open letter outlined a bit more clearly.

In a section listed under “control,” the document states:

For certain types of safety-critical AI systems — especially vehicles and weapons platforms — it may be desirable to retain some form of meaningful human control, whether this means a human in the loop, on the loop, or some other protocol. In any of these cases, there will be technical work needed in order to ensure that meaningful human control is maintained.

Later, the document recommends controls for AI, and here it begins to hint at the meaty stuff (i.e., AI running amok):

In particular, if the problems of validity and control are not solved, it may be useful to create “containers” for AI systems that could have undesirable behaviors and consequences in less controlled environments. Both theoretical and practical sides of this question warrant investigation.

But the most direct connection to artificial intelligence taking over comes in the document’s reference to a study titled “Stanford’s One-Hundred Year Study of Artificial Intelligence.”

Stanford’s One-Hundred Year Study of Artificial Intelligence includes “Loss of Control of AI systems” as an area of study, specifically highlighting concerns over the possibility that “we could one day lose control of AI systems via the rise of superintelligences that do not act in accordance with human wishes – and that such powerful systems would threaten humanity.”

The letter is hosted by the Future of Life Institute, a nonprofit organization led by Skype co-founder Jaan Tallinn and professors and students from MIT, Harvard, UC Santa Cruz and Boston University. The group describes its mission as supporting “research and initiatives for safeguarding life” and developing “ways for humanity to steer its own course considering new technologies and challenges.”

However, history doesn’t always neatly fit into our forecasts. If things continue as they have with brain-to-machine interfaces becoming ever more common, we’re just as likely to have to confront the issue of enhanced humans (digitally, mechanically and/or chemically) long before AI comes close to sentience.

Still, whether or not you believe computers will one day be powerful enough to go off and find their own paths, which may conflict with humanity’s, the very fact that so many intelligent people feel the issue is worth a public stance should be enough to grab your attention.

Now let’s hope this early warning open letter turns out to be unnecessary, because becoming a pet human probably won’t be much fun.

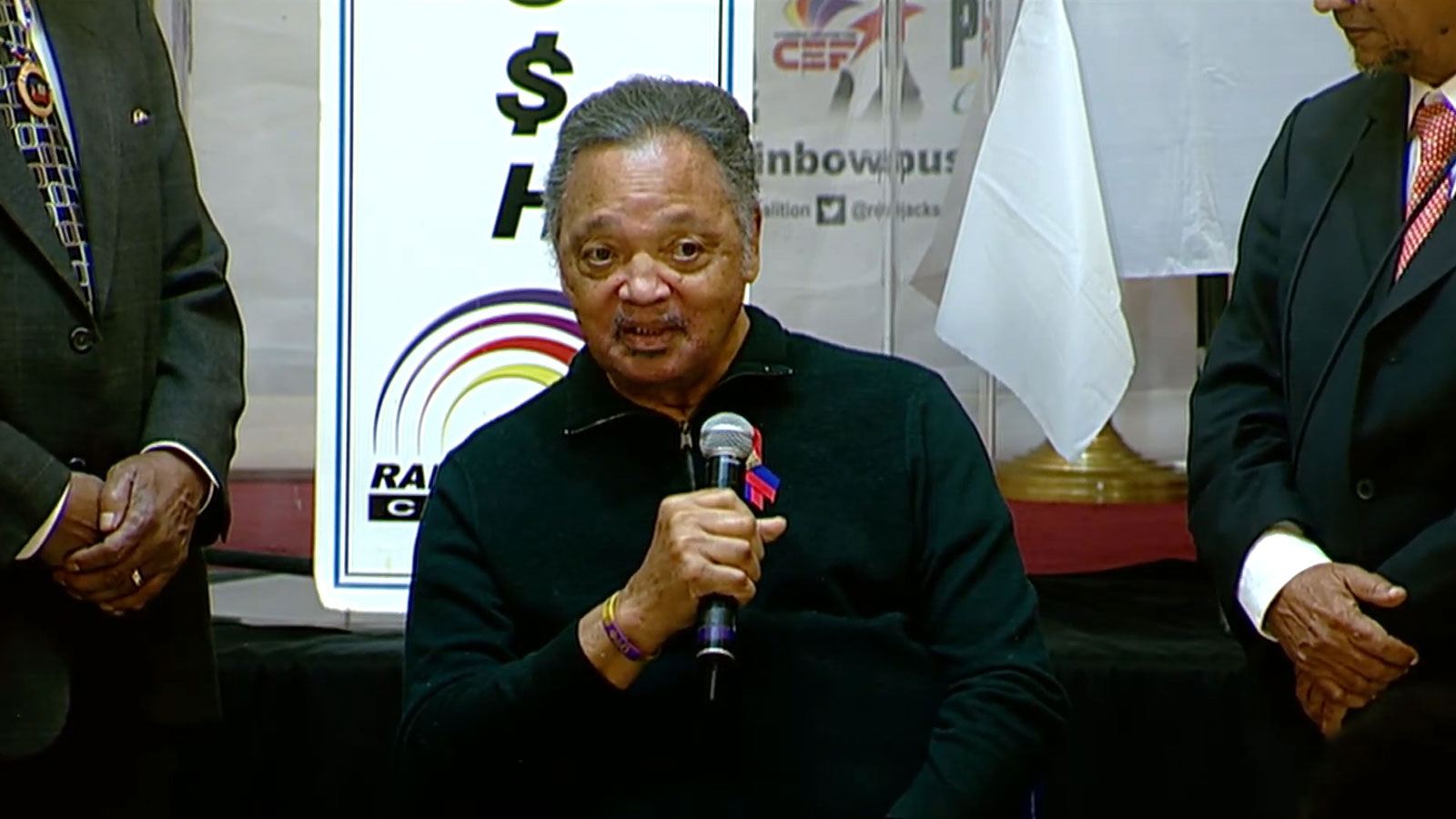

IMAGE: Elon Musk, Tesla Chairman, speaks at the Automotive News World Congress in Detroit, Tuesday, Jan. 13, 2015. CREDIT: PAUL SANCYA/ASSOCIATED PRESS

For more on this story go to: http://mashable.com/2015/01/13/elon-musk-stephen-hawking-artificial-intelligence/?utm_campaign=Feed%3A+Mashable+%28Mashable%29&utm_cid=Mash-Prod-RSS-Feedburner-All-Partial&utm_medium=feed&utm_source=feedburner&utm_content=Google+Feedfetcher